Twitter today followed up on its March request for proposals to measure the health of its network by announcing a new approach to handling abuse on its platform.

In a statement, VP of Trust and Safety Del Harvey and Director of Product Management, Health David Gasca noted that while fewer than 1% of accounts make up the majority of those reported for abuse, Twitter acknowledges they still "have a disproportionately large -- and negative -- impact on people’s experience."

To address that, Twitter says it's taking action to curb content that misrepresents and distracts from larger, important conversations -- by measuring the behavior of actors and users who intend to share it.

Twitter to Measure New Behavioral Signals

The Signals

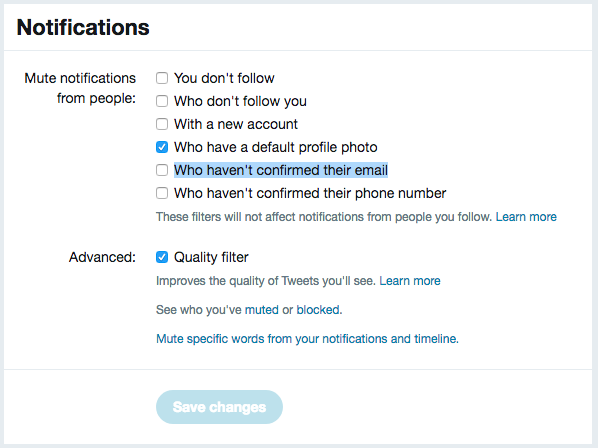

The behavioral signals Twitter will measure -- not all of which are externally visible, it says -- include whether an account has a confirmed email address. Users can already block notifications or mentions from such accounts, and from accounts matching several other criteria, in the network's settings.

Additionally, Twitter will flag instances of a single individual signing up for multiple accounts at the same time or within a short period, as well as accounts that habitually Tweet at other accounts that don’t follow them.

Twitter also says it will institute new practices to detect indications of "coordinated attacks" on its site, as well as ways to measure the behavior of accounts that violate standards and the way they engage with each other.

What the Signals Will Do

The point of these behavioral measurements is to be proactive -- to help Twitter detect abuse on its platform before users have to report it themselves.

Ultimately, the signals will shape how Twitter organizes and displays content in the public areas of the network, such as visible conversations among users and search results.

The tricky part, however, is that these behaviors, and the content that often comes with them, don't directly violate Twitter's standards. The company therefore can't remove that content outright -- or so the statement suggests.

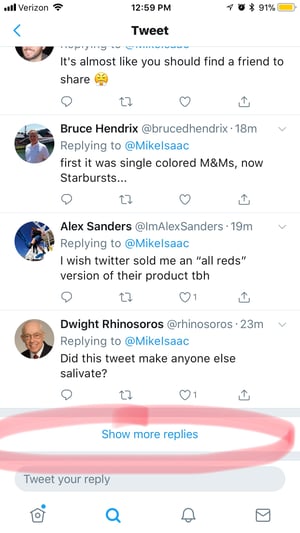

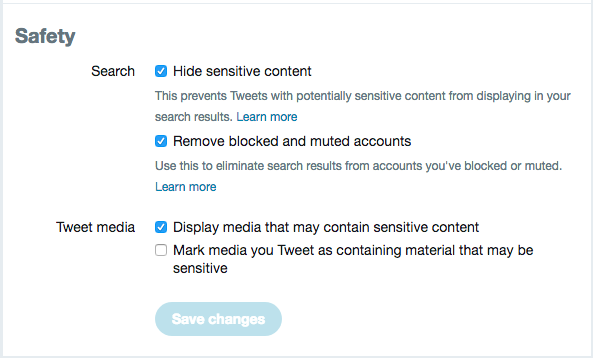

So, while the content will remain live, Twitter will make it harder to find. To see it, users will have to click "Show more replies" at the bottom of a thread, or change their settings to show all search results.

The Results

The outcome, Twitter hopes, is higher visibility of (and engagement with) what it describes as "healthy conversation."

Twitter has been testing these signals in various global markets, seeing such results as a 4% decrease in abuse reports from search, and an 8% decrease in abuse reports from conversations and threads.

At the same time, however, Twitter says there's still a long road ahead to fully addressing the health of the network.

"We’ll continue to be open and honest about the mistakes we make and the progress we are making," write Harvey and Gasca. "We’re encouraged by the results we’ve seen so far, but also recognize that this is just one step on a much longer journey to improve the overall health of our service and your experience on it."
