Over the past two years, social media networks have made no secret of their efforts to fight the spread of spam on their sites.

It largely began when it was revealed that foreign actors had weaponized Facebook to spread misinformation and divisive content in hopes of influencing the 2016 U.S. presidential election.

It was then revealed that Facebook was not alone: some of its fellow Big Tech peers, like Twitter and Google, had also been leveraged by the same or similar foreign actors to influence the election.

That's prompted these companies to take action -- publicly.

Since then, Facebook has released a number of statements about its efforts to emphasize news from "trusted sources" and to change its algorithm to focus on friends and family. Twitter issued a request for proposals to study the "health" of its network and began sweeping account removals. YouTube, which is owned by Google, made its own efforts to add more context to videos on its platform.

We’re committing Twitter to help increase the collective health, openness, and civility of public conversation, and to hold ourselves publicly accountable towards progress.

— jack (@jack) March 1, 2018

So, how have these efforts been paying off -- and are users noticing them?

According to our data, the answer is largely: No. Here's what we found.

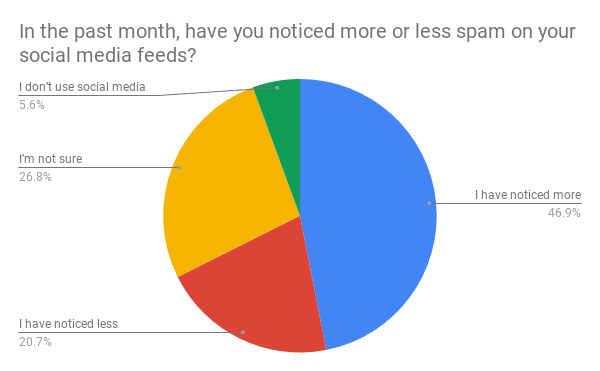

47% of Social Media Users Report Seeing More Spam in Their Feeds

Seeing Spam

The controversy surrounding social media networks and the way they manage, distribute, or suppress content shows no signs of slowing down. Just this week, the U.S. House Judiciary Committee held a hearing -- the second one this year -- on the "filtering practices" of social media networks, where representatives from Facebook, YouTube, and Twitter testified.

But for all the publicity around these tech giants' efforts to fix the aforementioned flaws (and answer to lawmakers in the process), many users aren't reporting any improvement.

In our survey of 542 internet users across the U.S., UK, and Canada, only 20% reported seeing less spam in their social media feeds over the past month, while nearly half (47%) reported seeing more.

Data collected using Lucid

That figure could indicate a number of things. For one, it's important to note that what constitutes spam is somewhat subjective. Facebook, for its part, defines spam as "contacting people with unwanted content or requests [like] sending bulk messages, excessively posting links or images to people's timelines and sending friend requests to people you don't know personally."

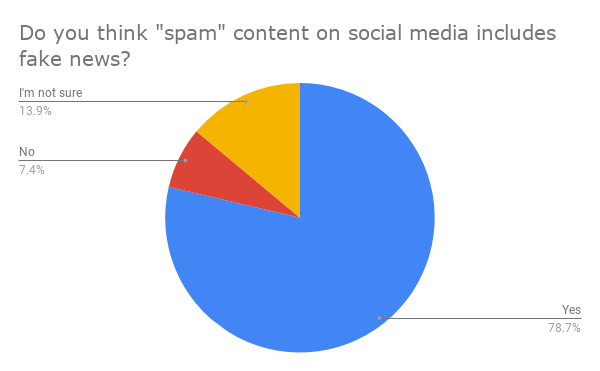

Fake news, for instance, does not seem to fall under that definition, but according to our research, about 79% of people think it generally counts as spam.

Data collected using Lucid. Survey sample = 375 internet users from the U.S. and Canada.

Facebook has struggled to define "fake news," despite CEO Mark Zuckerberg's best efforts in his congressional hearings and a recent interview with Kara Swisher on Recode Decode.

According to recent reporting by The Verge, Facebook content moderators are sometimes instructed to take a "hands-off" approach to content that some might consider spam, such as "flagged and reported content like graphic violence, hate speech, and racist and other bigoted rhetoric from far-right groups."

That contrasts with some of the testimony from Facebook's VP of Global Policy Management, Monika Bickert, at this week's hearing, as well as much of what Zuckerberg recounted in his interview with Swisher.

The company has previously spoken to the subjectivity challenges of moderating hate speech, and released a statement on how it plans to address these reports.

However, the recent findings that content moderators are instructed not to remove content that leans in a particular political direction, even if it violates Facebook's terms or policies, contradict the greater emphasis the company says it's placing on a positive (and safe) user experience over ad revenue.

The Fight Against Election Meddling

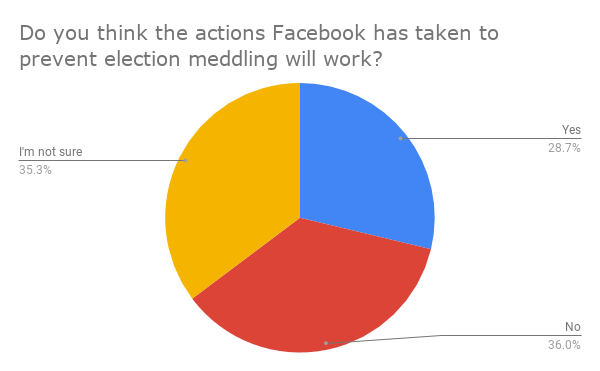

We also ran a second survey, of 579 internet users across the U.S., UK, and Canada, to measure public perception of Facebook's specific efforts to fight election meddling.

Here, respondents indicated a bit more confidence, with about 28% reporting that they think the company's battle against the use of its platform to interfere with elections will work.

Data collected using Lucid

But nearly as many respondents seem uncertain as believe the efforts are futile, which could indicate widespread confusion over what, exactly, the company is doing to prevent a repeat of the weaponization its platform previously suffered.

It's an area where Facebook fell short, Zuckerberg told Swisher, because it was "too slow to identify this new kind of attack, which was a coordinated online information operation." To be more proactive, he and many of his Big Tech peers have pointed to artificial intelligence (AI) systems that can detect and flag this type of behavior faster than human reviewers can.

But AI is also widely misunderstood: some reports suggest people fear it without realizing how often they use it day-to-day. That may contribute to uncertainty over whether Facebook and its social media counterparts will succeed in their heavily AI-dependent efforts to curb this type of activity.

One factor to consider with both surveys and their results is salience. Right now, the potential misuse of social media is top-of-mind for many, likely because of hearings like the one that took place this week and the topic's presence in the news cycle.

That could sway how people perceive spam, including how much of it they report seeing, as well as how effective they judge social media's fight against election interference to be.

HubSpot VP Meghan Anderson says that when she saw the data, "my first reaction was that people just have a heightened awareness of it at this moment."

"It's part of our global dialogue," she explains. "There are apology ads running on TV. The impact of misinformation and spam is still setting in."

But alongside the issues of prevalence and clarity comes the question of trust.

"What I think the data does show," Anderson says, "is that when you lose someone's trust, that distrust lingers for long after you've made changes to remedy it."
